AI Perspectives #24

The European Union: Humanity's Last Hope for Global AI Governance

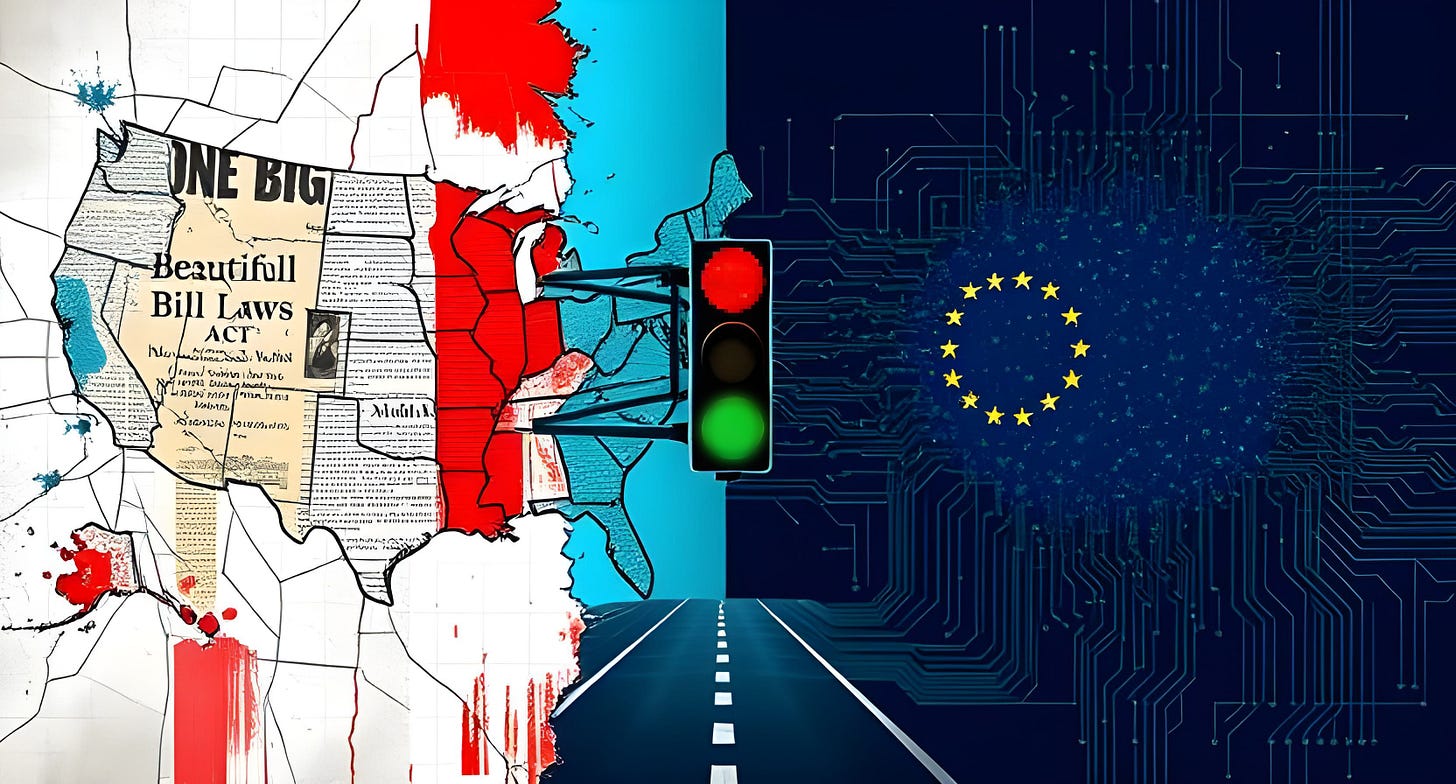

The world stands at a critical juncture in artificial intelligence governance, where the trajectory of regulatory frameworks will determine whether AI serves humanity's interests or becomes an unchecked force that undermines democratic values and human rights. As the United States retreats into regulatory uncertainty and policy reversals, the European Union emerges as the final beacon of hope for establishing comprehensive, stable, and globally influential AI governance that can protect not only European citizens but people worldwide through the powerful mechanism of regulatory spillover effects.

The Collapse of American AI Regulatory Leadership

Policy Instability and Administrative Reversals

The United States has demonstrated a troubling pattern of regulatory instability that renders it unreliable as a global leader in AI governance. The transition from the Biden to the Trump administration brought sweeping reversals of AI policy. Biden's Executive Order 14110, widely regarded as the most comprehensive U.S. federal measure on AI governance, was revoked on the new administration's first day, and President Trump's Executive Order "Removing Barriers to American Leadership in Artificial Intelligence" then directed agencies to review and unwind actions taken under it. The most concerning illustration of American regulatory unreliability is the House of Representatives' passage of the "One Big Beautiful Bill Act" (OBBBA), whose House version included a 10-year moratorium on state and local AI regulation. This provision would prohibit enforcement of any state or local law "limiting, restricting, or otherwise regulating" AI models, AI systems, or automated decision systems, effectively creating a regulatory vacuum at precisely the moment when AI governance is most urgently needed.

The Fragmentation of American AI Governance

The American approach to AI regulation exemplifies the dangers of fragmented, inconsistent policymaking. While some states, such as Colorado, have enacted comprehensive AI legislation, the proposed federal moratorium would override these efforts without putting a federal framework in their place, leaving preempted state laws, unsettled lines of authority, and regulatory gaps. This fragmentation is compounded by the Trump administration's emphasis on voluntary industry guidelines rather than mandatory compliance frameworks, which effectively allows the technology industry to self-regulate in areas where public oversight is essential.

The consequences of this regulatory retreat extend beyond American borders, as the absence of stable U.S. leadership creates a global governance vacuum that threatens to leave AI development largely unregulated. This situation becomes particularly alarming when considering the rapid pace of AI advancement and the potential for irreversible societal harm if regulatory frameworks fail to keep pace with technological development.

The European Union's Comprehensive Response

The EU AI Act: A Global Regulatory Blueprint

In contrast to American instability, the European Union has demonstrated a sustained commitment to comprehensive AI governance through the EU AI Act, which entered into force on August 1, 2024. This landmark legislation is the world's first comprehensive legal framework for artificial intelligence, establishing common rules for responsible AI development and deployment across all member states.

The AI Act's risk-based approach provides a coherent framework for addressing AI challenges across sectors and applications. By classifying AI systems into four risk categories (unacceptable, high, limited, and minimal risk), the legislation creates clear compliance pathways and scales obligations to the level of risk, an approach intended to avoid stifling innovation. Systems posing unacceptable risk are banned outright, limited-risk systems carry mainly transparency duties, and minimal-risk systems face no specific obligations. High-risk AI systems, by contrast, face strict obligations, including risk assessment, data governance, documentation requirements, and human oversight measures.

Harmonized Standards Across 27 Member States

Unlike the fragmented American approach, the EU AI Act creates uniform standards across all 27 member states, largely eliminating regulatory arbitrage between them and providing clear guidance for companies operating in the European market. This harmonization represents a significant achievement in multilateral governance, demonstrating that democratic societies can reach consensus on complex technological issues when guided by shared values of human rights, safety, and transparency.

The implementation timeline reflects careful planning and stakeholder engagement. The rollout is phased: prohibitions on unacceptable-risk systems apply from February 2025, obligations for general-purpose AI models from August 2025, and most high-risk system requirements from August 2026, with high-risk AI embedded in products already covered by EU product-safety legislation following in August 2027. This structured approach gives businesses clear deadlines while allowing adequate time for compliance preparation.
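To make the tiered structure and phased rollout concrete, the following Python sketch encodes the four risk categories as a simple lookup. It is a simplified illustration, not a compliance tool: the obligation summaries paraphrase this article, the application dates (2 February 2025 for prohibitions, 2 August 2026 for high-risk and limited-risk transparency rules) follow the Act's general timetable, and the names `RiskTier` and `obligations_in_force` are hypothetical. General-purpose AI model obligations and the later 2027 deadline for high-risk AI embedded in already-regulated products are deliberately left out.

```python
from datetime import date
from enum import Enum


class RiskTier(Enum):
    """The four EU AI Act risk categories (hypothetical encoding for illustration)."""
    UNACCEPTABLE = "unacceptable"
    HIGH = "high"
    LIMITED = "limited"
    MINIMAL = "minimal"


# Paraphrased summary of what each tier entails; not the legal text.
OBLIGATIONS = {
    RiskTier.UNACCEPTABLE: "prohibited",
    RiskTier.HIGH: "risk assessment, data governance, documentation, human oversight",
    RiskTier.LIMITED: "transparency obligations",
    RiskTier.MINIMAL: "no specific obligations",
}

# Date from which each tier's rules apply. Simplifying assumption: one date per tier.
# None means the tier carries no dated obligations at all.
APPLIES_FROM = {
    RiskTier.UNACCEPTABLE: date(2025, 2, 2),
    RiskTier.HIGH: date(2026, 8, 2),
    RiskTier.LIMITED: date(2026, 8, 2),
    RiskTier.MINIMAL: None,
}


def obligations_in_force(tier: RiskTier, on: date) -> str:
    """Return the (simplified) obligations that apply to a tier on a given date."""
    start = APPLIES_FROM[tier]
    if start is not None and on < start:
        return "not yet applicable"
    return OBLIGATIONS[tier]


if __name__ == "__main__":
    print(obligations_in_force(RiskTier.HIGH, date(2025, 9, 1)))          # not yet applicable
    print(obligations_in_force(RiskTier.UNACCEPTABLE, date(2025, 9, 1)))  # prohibited
```

Keeping the obligations and the dates in two separate tables mirrors the Act's own logic: what a tier requires is fixed by its risk level, while when it bites is a separate question of the phased timetable.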

The Brussels Effect: How EU Regulation Protects the World

The Mechanism of Global Regulatory Influence

The European Union's regulatory influence extends far beyond its borders through the well-documented "Brussels Effect" (a term coined by legal scholar Anu Bradford), whereby EU standards become de facto global standards through market forces and corporate compliance strategies. The phenomenon occurs because multinational companies often find it more economical to adopt EU standards globally than to maintain separate production lines and compliance systems for different markets.

The Brussels Effect operates through several key mechanisms that make EU regulations globally influential. First, the EU's large market size gives companies strong incentives to comply with EU standards in order to reach European consumers. Second, the EU's regulatory capacity and institutional expertise enable it to develop comprehensive, technically sophisticated standards that often become industry best practices. Third, the EU's standards are typically more stringent than those of other jurisdictions, creating a "race to the top" in which companies adopt the highest available standard globally.

Historical Evidence: GDPR's Global Impact

The General Data Protection Regulation (GDPR) provides compelling evidence of how EU regulations reshape global practices. Since GDPR took effect in 2018, countries worldwide have adopted similar data protection frameworks: more than 120 countries now have comprehensive data privacy laws, many of them modeled at least in part on European standards. Major technology companies, including Apple, Google, and Microsoft, have extended many GDPR-style privacy controls to users globally, showing how EU regulations can become universal corporate policies.

The USB-C common charger directive offers another clear example of the Brussels Effect in action. Apple's decision to adopt USB-C globally for its iPhone 15 and 16 models, rather than maintaining different charging standards for different markets, illustrates how EU regulations drive worldwide standardization. The decision affects consumers everywhere, since maintaining separate hardware variants for different regions would have added cost and complexity.

AI Regulation and Corporate Compliance Strategies

The same dynamic that drove global GDPR adoption is likely to apply to the EU AI Act, as companies find it uneconomical to maintain separate AI systems and governance frameworks for different markets. Companies without the resources of tech giants like Google and Microsoft, which can better afford region-specific compliance programs, are especially likely to adopt EU AI Act standards globally rather than build separate compliance systems for each region.

This global adoption will be particularly pronounced for AI systems embedded in consumer products, where differentiation by market would require separate development, testing, and manufacturing processes. Companies developing AI-powered healthcare devices, autonomous vehicles, or financial services applications will find it more practical to meet EU standards globally rather than managing multiple compliance frameworks.

AI Regulation as Traffic Regulation: Protecting Society, Not Limiting Innovation

The Automotive Industry Analogy

Critics of AI regulation often argue that governance frameworks will stifle innovation and technological progress, but this concern misunderstands how effective regulation operates. The automotive industry provides an instructive parallel: traffic regulations do not limit car manufacturers' ability to innovate in engine design, safety features, or performance. Instead, traffic rules govern how vehicles are used in public spaces to protect drivers, passengers, and pedestrians.

Similarly, AI regulation should focus on governing the deployment and use of AI systems rather than restricting the underlying technological development. Just as vehicle safety standards require functioning brakes and proper lighting without dictating engine design, AI regulations should establish safety, transparency, and accountability requirements without prescribing specific technical architectures.

Innovation Within Regulatory Frameworks

The automotive industry demonstrates that innovation thrives within well-designed regulatory frameworks. Safety regulations have driven advances in vehicle design, from airbags and anti-lock braking systems to electronic stability control and collision avoidance technology. Environmental regulations have spurred innovation in fuel efficiency, emissions control, and electric vehicle development.

The same principle applies to AI regulation, where governance requirements for transparency, fairness, and human oversight can drive innovation in explainable AI, bias detection, and human-AI interface design. Rather than constraining technological progress, thoughtful regulation creates market incentives for developing more robust, reliable, and socially beneficial AI systems.

Sweden's Total Governance Model: A Framework for the Future

Beyond Traditional Regulatory Approaches

The rapidly evolving nature of AI technology requires governance frameworks that can adapt to technological change while maintaining core principles of safety, transparency, and accountability. Traditional bureaucratic processes, designed for stable industries with well-understood risks, prove inadequate for governing emerging technologies characterized by rapid development cycles and uncertain long-term implications.

Sweden's Total Governance (TG) Model represents an innovative approach to AI governance that addresses these challenges through a comprehensive framework emphasizing transparency, accountability, fairness, and adaptability. This model, developed by the Swedish AI Association, draws on Sweden's tradition of consensus-building, transparency, and collective responsibility to create governance structures capable of keeping pace with technological advancement.

Core Components of the TG Model

The Total Governance Model operates through four interconnected principles that create a comprehensive governance framework. Transparency requirements ensure that AI systems can explain their decision-making processes and provide clear information about their capabilities and limitations. Accountability mechanisms establish clear responsibility chains for AI system outcomes, with auditable processes and designated responsible parties.

Fairness principles prevent discrimination and bias in AI applications, requiring regular testing and validation to ensure equitable treatment across different populations. Adaptability ensures that governance frameworks can evolve with technological advancement, incorporating new understanding of AI capabilities and risks without requiring complete regulatory overhauls.

The TG Mark: Certification for Trustworthy AI

The TG Model includes a certification mechanism, the TG Mark, which provides public validation that AI systems meet governance standards. This certification system creates market incentives for responsible AI development while providing consumers, businesses, and policymakers with clear indicators of trustworthy AI systems. The TG Mark represents a practical implementation of governance principles that can be adopted across different sectors and jurisdictions.

The Path Forward: EU Leadership in Global AI Governance

Sweden's Contribution to European Leadership

Sweden's development of the Total Governance Model positions the European Union to lead global AI governance through practical, implementable frameworks that balance innovation with protection. The Swedish AI Commission's 75 policy recommendations, presented to the government in December 2024, demonstrate the country's commitment to comprehensive AI governance that can serve as a model for other nations.

Sweden's approach emphasizes community engagement and public dialogue, ensuring that AI governance reflects democratic values and citizen concerns rather than solely technical or commercial considerations. This inclusive approach to governance development strengthens the legitimacy and effectiveness of regulatory frameworks while building public trust in AI systems.

The EU as Global Standard-Setter

The combination of the EU AI Act's comprehensive regulatory framework and Sweden's Total Governance Model creates an unprecedented opportunity for European leadership in global AI governance. As American regulatory leadership falters and other major powers focus primarily on economic competition rather than governance, the EU emerges as the primary advocate for human-centered AI development that prioritizes safety, transparency, and democratic values.

The EU's approach to AI governance reflects broader European commitments to human rights, democratic governance, and social responsibility that distinguish it from purely market-driven or state-controlled approaches to technology regulation. This value-based approach to governance provides a foundation for global standards that can protect human welfare while enabling beneficial AI innovation.

Global Implications and Responsibilities

The European Union's role as the last reliable advocate for comprehensive AI governance carries profound responsibilities for global welfare. The decisions made in Brussels and Stockholm over the next few years will determine whether humanity develops governance frameworks capable of managing AI's transformative power or faces a future where technological advancement proceeds without adequate safeguards.

The Brussels Effect means that EU decisions are likely to influence global practices whether or not other governments adopt similar regulations. This reality places a special obligation on European policymakers to consider global implications when designing AI governance frameworks, recognizing that their decisions will affect people worldwide.

The stakes could not be higher. As artificial intelligence becomes increasingly integrated into critical systems affecting healthcare, transportation, finance, and governance, the absence of effective regulation risks catastrophic failures that could undermine public trust in technology and democratic institutions. The European Union's commitment to comprehensive, value-based AI governance represents humanity's best hope for navigating this technological transition while preserving human agency, democratic values, and social welfare.

The path forward requires sustained political will, continued innovation in governance frameworks, and recognition that the EU's regulatory leadership serves not only European interests but global human welfare. Sweden's Total Governance Model provides the practical tools needed to implement this vision, while the EU AI Act creates the legal foundation for worldwide adoption. Together, they offer a blueprint for governing artificial intelligence in the service of human flourishing rather than mere technological advancement.